NOTE: This blog post references HPE as our example but is relevant to other storage vendors out there. I am in no way affiliated with HPE 😉

Back in the day, “when I was a lad“, the recommendation for SQL Server was to split your data, logs and tempdb files onto separate drives/LUNs to get the most out of your storage. Jump forward to 2021: is this still relevant, and should I be splitting my SQL Server drives onto separate LUNs on new SAN storage? It is a question often asked not just by customers but also by their 3rd party managed service providers / hosting companies. It can also come along the lines of, “Why can’t we just put everything on C:\ because the backend is all on the same LUN?“ This is slightly different, as they’re questioning the drive lettering more than the creation of separate LUNs, but it is still relevant to this topic.

The majority (not all) of SQL Servers will have a SAN hosting their storage, and SANs these days are super duper quick, especially those that have tiered SSD or, even fancier, flash storage. The technical differences between the older spinning rust and the new fancy dan flash storage are not something we’ll delve into, as there are plenty of other blogs out there and it’s not really in the scope of this blog post.

Each storage vendor will (should) provide their own documentation specific to how the SAN should be configured for SQL Server to get the best bang for your buck. Taking HPE as an example, they have PDFs for their various offerings, including 3PAR/Primera as well as Nimble. Although there are some slight differences, each of them suggests that you SHOULD split your drives onto separate volumes.

I won’t dissect the documents in their entirety, but the sections below will help with answering the question. They mostly relate to which performance policy to set for your data, logs and tempdb based on the workload (i.e. OLTP / OLAP and size of files):

- Array Snapshots and remote replication

  - You may not (won’t) want tempdb as part of this due to its large rate of data change

- Volume Block Size

  - According to the documentation, depending on the workload, you may (or may not?) want 8 KB for data and 4 KB for logs as per their default policy

- Caching

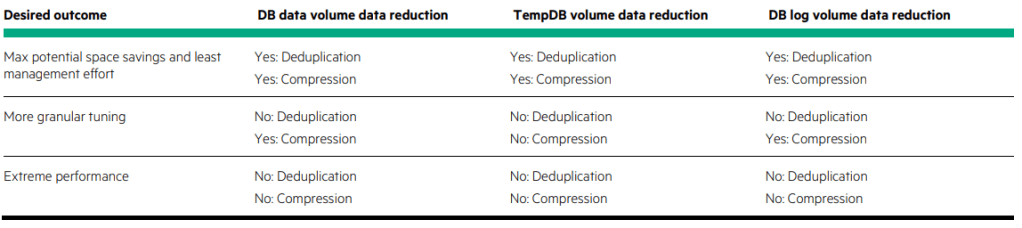

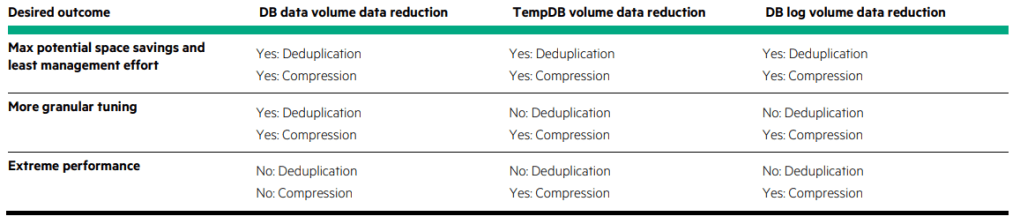

- Deduplication

- Compression

- Number of disks available

To provide a great overview, below is a snippet from the HPE Nimble recommendations:

Storage Layout for SQL Server Volumes

In general, using multiple volumes for SQL Server deployments offers several advantages:

• The ability to apply custom performance policy settings to specific volumes

• The ability to control cache use for volumes on adaptive flash arrays

• The choice of limiting replication of certain volumes, such as the tempdb volume

• A gain in performance for I/O-intensive databases, especially if the queue depth of a device is at risk of becoming saturated

• The ability to group databases in separate volume collections and apply custom backup and replication schedules

• The ability to quickly recover specific databases with volume-based restores

Before designing the storage layout, carefully consider the administrative overhead of managing multiple volumes. The ideal solution provides a balance between optimization and administration.
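To make the trade-offs in that snippet a bit more concrete, here is a rough Python sketch of how a split layout could be written down and sanity-checked before anyone touches the array. Everything in it is my own illustration rather than anything from HPE: the `VolumePlan` class, the volume names and the `check_layout` helper are hypothetical, the 8 KB / 4 KB block sizes are simply the defaults mentioned earlier in this post, and the tempdb block size is a placeholder assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VolumePlan:
    """One planned SAN volume (hypothetical structure, not a vendor API)."""
    name: str           # made-up volume name
    role: str           # "data", "log" or "tempdb"
    block_size_kb: int  # volume block size implied by the performance policy
    replicate: bool     # include in array snapshots / remote replication?


def build_layout():
    """Example split layout; the values are illustrative assumptions."""
    return [
        VolumePlan("sql-data", "data", 8, True),
        VolumePlan("sql-log", "log", 4, True),
        # tempdb block size is a placeholder; check your vendor's policy.
        VolumePlan("sql-tempdb", "tempdb", 8, False),
    ]


def replicated_volumes(layout):
    """Names of the volumes that would be snapshotted / replicated."""
    return [v.name for v in layout if v.replicate]


def check_layout(layout):
    """Return warnings for choices this post advises against."""
    warnings = []
    for v in layout:
        if v.role == "tempdb" and v.replicate:
            warnings.append(
                f"{v.name}: tempdb is replicated despite its high rate of change"
            )
    roles = {v.role for v in layout}
    for role in ("data", "log", "tempdb"):
        if role not in roles:
            warnings.append(f"no dedicated volume for {role}")
    return warnings


if __name__ == "__main__":
    layout = build_layout()
    print("Replicated:", replicated_volumes(layout))
    print("Warnings:", check_layout(layout))
```

Writing the plan down like this also doubles as documentation of why each volume exists, which is handy when weighing up the admin overhead.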

Allocation Unit Size

Something that often comes up during these conversations is how the volume should be formatted. Regardless of the chosen Performance Policy and indeed volume block size, the default recommendation from HPE is to use a 64 KB allocation unit size for your data, logs and tempdb. This is only a recommendation; only testing in your specific environment will truly tell you what allocation unit size to set.
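If you want to check what an existing volume was actually formatted with, one option on Windows is to run `fsutil fsinfo ntfsinfo <drive>` and look at the "Bytes Per Cluster" line. The Python sketch below parses that text and compares it with the 64 KB recommendation. The helper names are my own, and I'm assuming the output contains a line of the form `Bytes Per Cluster : 65536`; the exact layout can vary between Windows versions, so treat the regular expression as a starting point.

```python
import re

RECOMMENDED_BYTES = 64 * 1024  # 64 KB, the HPE default recommendation above


def bytes_per_cluster(fsutil_output):
    """Extract the allocation unit size from fsutil-style text.

    Assumes a line such as 'Bytes Per Cluster :   65536'.
    """
    match = re.search(r"Bytes Per Cluster\s*:\s*([\d,]+)", fsutil_output)
    if match is None:
        raise ValueError("no 'Bytes Per Cluster' line found")
    return int(match.group(1).replace(",", ""))


def matches_recommendation(fsutil_output, recommended_bytes=RECOMMENDED_BYTES):
    """True if the volume's allocation unit size equals the recommendation."""
    return bytes_per_cluster(fsutil_output) == recommended_bytes


if __name__ == "__main__":
    # Illustrative text only, not captured from a real server.
    sample = "Bytes Per Sector  : 512\nBytes Per Cluster : 65536\n"
    print(bytes_per_cluster(sample), matches_recommendation(sample))
```

In practice you would paste or pipe the real command output into `bytes_per_cluster` for each of your data, log and tempdb drives.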

Additional Information

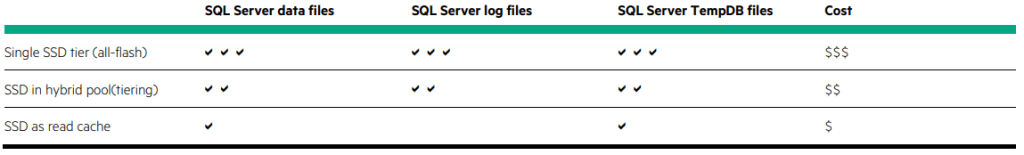

Below are some further snippets from the HPE documentation regarding default configurations:

Comparison of SSD / tiering uses for SQL Server files:

Conclusion

Should you split your SQL Server drives in 2021? The Nimble documentation has a sentence which sums it up very well:

The ideal solution provides a balance between optimization and administration.

Having everything thrown into a single pool will make life a lot easier for the SAN guy. Splitting things up could lead to an administrative nightmare, but may be required to get the best out of the storage for your workload.

What I will say is: review your storage vendor’s documentation / recommendations and compare them with the sort of environment / workload you have. If they fit your specific setup, configure it, test it and use it as a baseline to compare with other configurations.
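As a rough idea of what "use it as a baseline" could look like, the Python sketch below takes I/O latency samples (in milliseconds) from two test runs and reports the percentage change in median and 95th percentile latency. It is only an illustration: the function names are mine, how you collect the samples (your load testing tool of choice) is up to you, and the nearest-rank percentile is just one simple way to summarise them.

```python
from statistics import median


def summarize(latencies_ms):
    """Median and nearest-rank 95th percentile of latency samples."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    # Nearest-rank method: rank = ceil(0.95 * n), done in integer maths.
    rank = (95 * len(ordered) + 99) // 100
    return {"median_ms": median(ordered), "p95_ms": ordered[rank - 1]}


def compare(baseline_ms, candidate_ms):
    """Percentage change of the candidate run relative to the baseline.

    Negative numbers mean the candidate configuration had lower latency.
    """
    base = summarize(baseline_ms)
    cand = summarize(candidate_ms)
    return {key: (cand[key] - base[key]) / base[key] * 100 for key in base}


if __name__ == "__main__":
    # Placeholder numbers purely to show the call, not real measurements.
    vendor_recommended = [4.0, 4.0, 4.0, 4.0]
    alternative = [5.0, 5.0, 5.0, 5.0]
    print(compare(vendor_recommended, alternative))
```

Run the same workload against each configuration, keep the raw samples, and store the comparison alongside your documentation.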

If testing proves that their recommendations do not provide optimal performance for your environment, then it is perfectly fine to deviate from them, as long as the admin overhead is manageable. All I would add is that whatever configuration decision is made, make sure the reasoning behind it is fully documented, as someone several years later will no doubt ask why 🙂
