tl;dr: Over the years I’ve read a lot of blog posts and watched a lot of videos that tell you to keep all your tempdb files the same size. What I haven’t seen much of (if anything) is the performance impact you actually see when they are not sized equally. This blog post aims to address that 😉

Introduction

We had a customer experiencing significant performance issues leading to application timeouts (30s), so they called on Datamasterminds to investigate. Although this wasn’t a constant performance issue and only reached this extreme very infrequently, we were fortunate that they had invested in a SQL Server monitoring tool (SQL Sentry), which had captured the historical performance.

Analysis

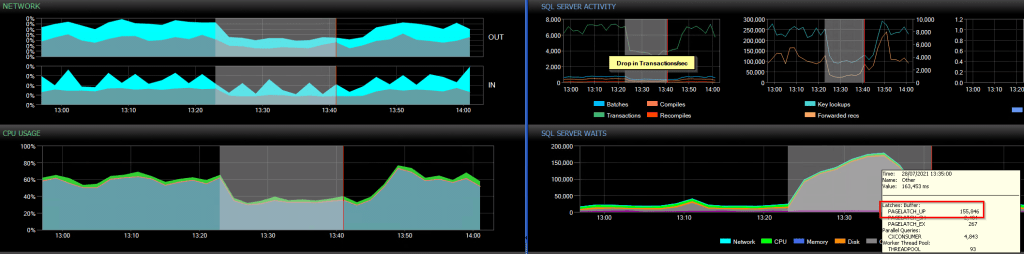

We looked at the various dates/times they had noted performance issues; an example is shown below:

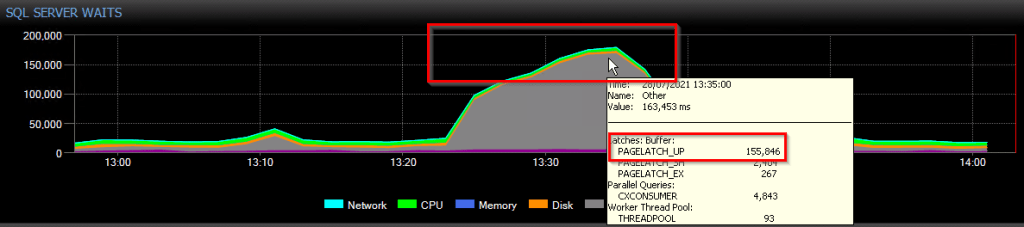

It wasn’t pretty during these times: very high PAGELATCH_UP (tempdb PFS) waits and the dreaded THREADPOOL waits can be seen. We got to work setting up additional monitoring and analysing the database(s) and queries which were running at the time. Long story short, this led us to a select few stored procedures that were creating/dropping a lot of temporary objects and, in some cases, running a very high number of inserts/deletes against them in loops/cursors. With queries continuing to come in, the wait times got higher, ultimately leading to requests being queued and eventually hitting the 30s application timeout.

A good explanation of what a Page Free Space (PFS) page is can be found over at Microsoft Docs.
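If you want to check whether a page latch wait is sitting on an allocation page, the page ID gives it away. Here is a minimal Python sketch, assuming the `db_id:file_id:page_id` format that `sys.dm_os_waiting_tasks` reports in `resource_description` for page latches. The function names are made up for illustration; the intervals (a PFS page every 8,088 pages, a GAM/SGAM page every 511,232 pages) are the commonly documented ones.

```python
# Allocation-page intervals for SQL Server data files (8 KB pages).
PFS_INTERVAL = 8088      # one PFS page tracks 8,088 pages
GAM_INTERVAL = 511232    # one GAM/SGAM page tracks 511,232 pages (about 4 GB)


def classify_page(page_id):
    """Return 'PFS', 'GAM', 'SGAM' or 'other' for a page ID within a file."""
    if page_id <= 0:
        return "other"  # page 0 is the file header
    if page_id == 1 or page_id % PFS_INTERVAL == 0:
        return "PFS"
    if page_id == 2 or page_id % GAM_INTERVAL == 0:
        return "GAM"
    if page_id == 3 or (page_id - 1) % GAM_INTERVAL == 0:
        return "SGAM"
    return "other"


def describe_wait_resource(resource):
    """Describe a 'db_id:file_id:page_id' string (hypothetical helper)."""
    db_id, file_id, page_id = (int(part) for part in resource.split(":"))
    return f"db {db_id}, file {file_id}, page {page_id}: {classify_page(page_id)}"


# database_id 2 is tempdb, so this is the first PFS page of tempdb file 1.
print(describe_wait_resource("2:1:1"))
```

A lot of waits that all resolve to `PFS` in the same file id is the pattern described in this post.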

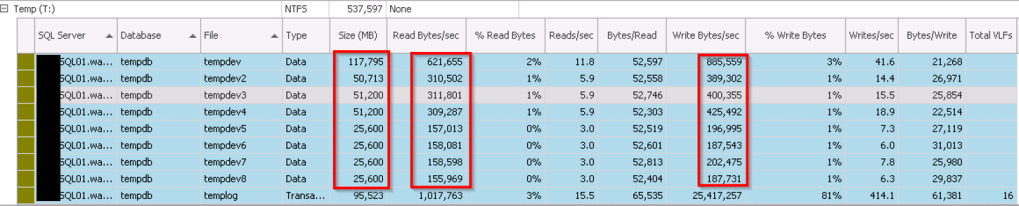

At the time, there were 8 tempdb files but they were all different sizes, so the usage was skewed. This is because of SQL Server’s proportional fill algorithm, where it will try (more often than not) to write to the file with the most free space. In this case, file id 1 was significantly larger (117GB) than any of the others (25-50GB), so it was the de facto choice when writing to tempdb, ultimately causing the contention we were seeing.
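To get a feel for the skew, here is a rough Python sketch. It is a simplified model, not SQL Server’s actual implementation: it assumes each file receives new allocations in proportion to its free space, and that the files start out empty so free space equals file size. Only the 117GB figure comes from this customer; the other seven sizes are hypothetical values picked from the 25-50GB range.

```python
def allocation_shares(free_gb):
    """Share of new allocations per file, proportional to its free space."""
    total_free = sum(free_gb.values())
    return {file_id: free / total_free for file_id, free in free_gb.items()}


# File 1 is the 117 GB file from this post; the other sizes are assumptions.
skewed = {1: 117, 2: 25, 3: 30, 4: 35, 5: 40, 6: 45, 7: 50, 8: 50}
# Same total space (392 GB), split evenly across 8 files.
equal = {file_id: 49 for file_id in range(1, 9)}

for label, files in (("skewed", skewed), ("equal", equal)):
    shares = allocation_shares(files)
    print(label, {fid: f"{share:.1%}" for fid, share in shares.items()})
```

With these made-up numbers, file 1 takes roughly 30% of the allocations, against 12.5% per file when all eight match.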

Tempdb usage during the high wait times can be seen below (taken from SQL Sentry). Note the variation in reads/writes to each file as well as the size differences:

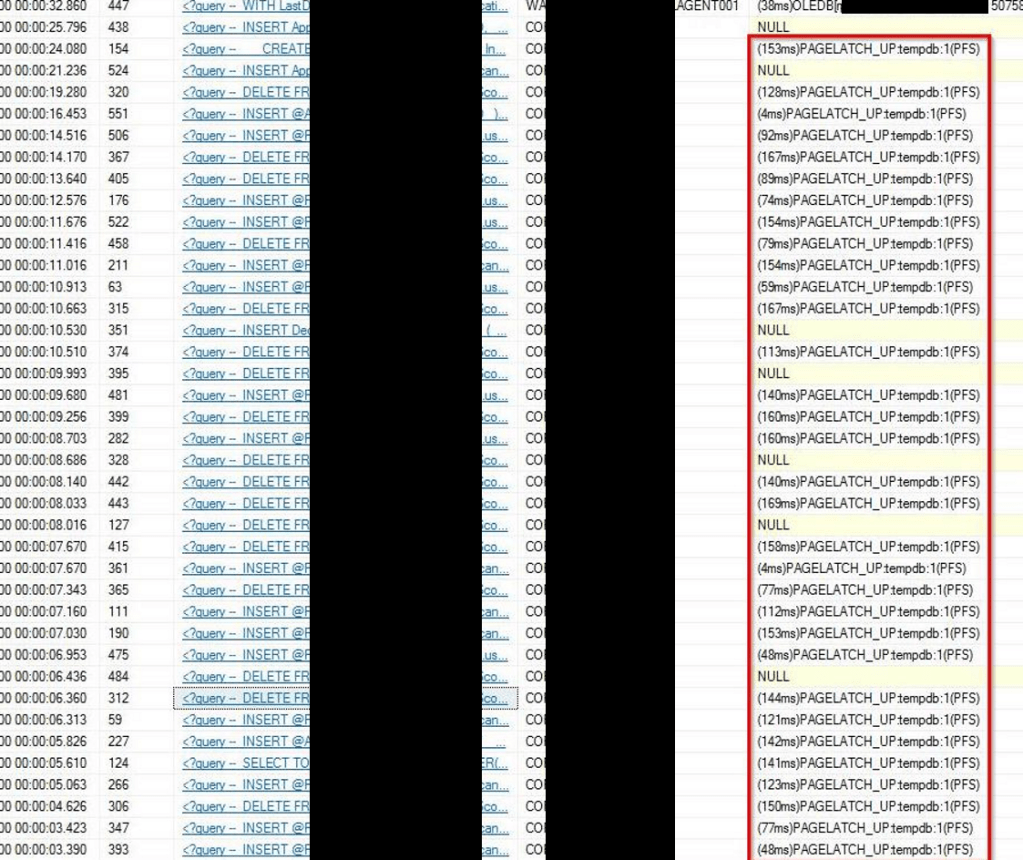

Below is some of the output from sp_whoisactive during the high PAGELATCH_UP wait times. You will see the majority relate to the INSERTs and DELETEs against temporary objects... all in tempdb file id 1.

NOTE: This is just a snippet of the output; the number of queries was in the thousands 😲

The Fix

The interim fix was very straightforward: resize the tempdb files to be the same size. With equal sizes, the proportional fill algorithm spread the load far more evenly 💪
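As a sketch of what the resize could look like, the Python below generates `ALTER DATABASE ... MODIFY FILE` statements to grow the smaller files up to a target size. This is an illustration only: the logical file names and the sizes of the smaller files are hypothetical, and growing everything to the largest file is just one option (this post doesn’t prescribe a target size). Files above the target would need shrinking instead, which the sketch leaves out.

```python
def equalise_statements(file_sizes_mb, target_mb=None):
    """Build T-SQL to grow every tempdb file below target_mb up to target_mb.

    file_sizes_mb maps logical file name -> current size in MB.
    If target_mb is omitted, the largest current file size is used.
    """
    if target_mb is None:
        target_mb = max(file_sizes_mb.values())
    return [
        f"ALTER DATABASE [tempdb] MODIFY FILE (NAME = N'{name}', SIZE = {target_mb}MB);"
        for name, size_mb in file_sizes_mb.items()
        if size_mb < target_mb  # files already at or above the target are skipped
    ]


# Hypothetical logical names and sizes; only the 117 GB (119808 MB) figure
# matches the largest file mentioned in this post.
current = {"tempdev": 119808, "temp2": 25600, "temp3": 51200}

for statement in equalise_statements(current):
    print(statement)
```

Review the generated statements (and the disk space they need) before running anything against a real instance.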

We’re still working with the customer on the performance tuning efforts to reduce resource usage and contention seen throughout.

The following list from Microsoft explains how increasing the number of equally sized tempdb data files reduces contention:

- If you have one data file for the tempdb, you only have one GAM page, and one SGAM page for each 4 GB of space.

- Increasing the number of data files that have the same sizes for tempdb effectively creates one or more GAM and SGAM pages for each data file.

- The allocation algorithm for GAM allocates one extent at a time (eight contiguous pages) from the number of files in a round robin fashion while honouring the proportional fill. Therefore, if you have 10 equally sized files, the first allocation is from File1, the second from File2, the third from File3, and so on.

- The resource contention of the PFS page is reduced because eight pages at a time are marked as FULL because GAM is allocating the pages.
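The round robin behaviour in the list above can be sketched in Python. SQL Server’s real allocator is internal, so this uses smooth weighted round robin as a stand-in, with each file’s size acting as its weight; the function name and the skewed sizes are assumptions for illustration.

```python
def allocation_order(weights, allocations):
    """Return the file chosen for each allocation (smooth weighted round robin).

    weights maps file id -> weight (here: file size); ties go to the
    file that appears first in the mapping.
    """
    total = sum(weights.values())
    current = {file_id: 0 for file_id in weights}
    order = []
    for _ in range(allocations):
        for file_id, weight in weights.items():
            current[file_id] += weight
        chosen = max(current, key=current.get)
        current[chosen] -= total
        order.append(chosen)
    return order


# Ten equally sized files: allocations simply rotate File1, File2, ... File10.
print(allocation_order({file_id: 1 for file_id in range(1, 11)}, 10))

# One oversized file (hypothetical sizes): file 1 is picked far more often.
print(allocation_order({1: 117, 2: 25, 3: 50}, 12))
```

With equal weights the algorithm collapses to plain round robin, which matches the "10 equally sized files" example in the list. Once one file is much larger, it keeps getting picked, and its allocation pages take most of the traffic.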

Hopefully this blog post gives you an insight into the sort of issue you can see if you don’t take the advice of Microsoft, consultants or indeed anyone telling you to size all your tempdb files the same 🤔
